Periodically, several organizations administer international exams to compare primary and secondary students with their international counterparts. Most notable of these exams are the Programme for International Student Assessment (PISA), organized by the OECD, and the Trends in International Mathematics and Science Study (TIMSS), managed by the International Association for the Evaluation of Educational Achievement (IEA). These exams offer policy makers the opportunity to assess the effectiveness of national education systems with respect to international competition.

International assessments of this nature are a relatively new development beginning with the creation of TIMSS in 1995 and PISA in 1997. Since then, each exam has been administered cyclically: PISA every three years and TIMSS every four. Both exams test subjects such as mathematics, science and reading comprehension. However, the two exams vary in the countries, age groups and types of questions used in their surveys.

Despite differences between the two tests, the latest exam data, drawn from 2011 and 2012, offer generally consistent results. PISA 2012 placed Shanghai, which participated in the survey independent of China as a whole, as the world leader in education. Other top achievers consisted primarily of several East Asian and Western European nations such as Singapore, Hong Kong, South Korea and Finland. The United States scored only marginally above the international average, while countries with lower levels of economic development expectedly attained the worst results. TIMSS 2011 generally corroborated these findings, with most countries obtaining similar positions.

These international exams have a noticeable influence on the education policies of participating nations. The information put forth by exam data serves as an indicator of how well a nation’s students may perform relative to their international counterparts. To countries looking to increase or maintain their current levels of international prestige, the possession of a highly skilled student base is imperative. As domestic benchmarks alone fail to place the performance of students in an international context, international exam results are essential for any standardized international comparison.

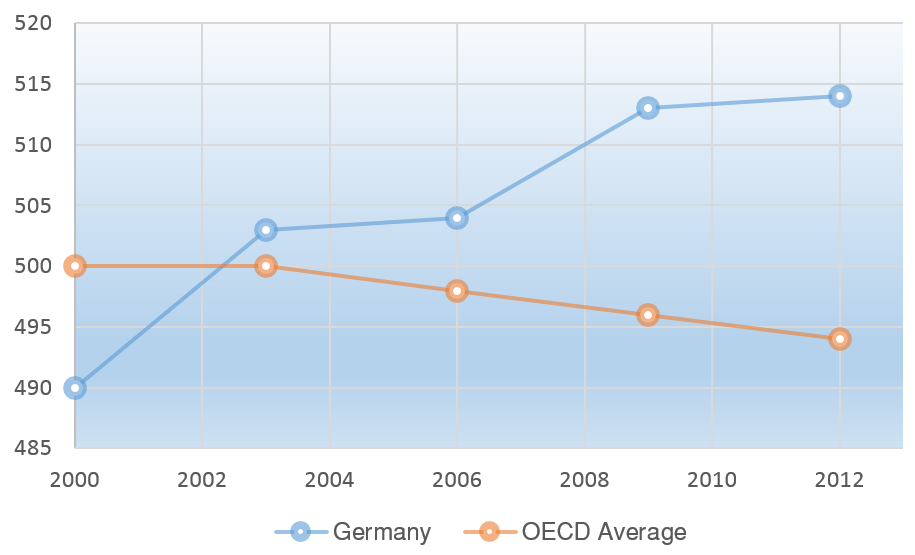

The impact of these tests and the fear of “falling behind” can be exemplified by Germany’s reaction to the 2000 PISA exam. Results placed Germany well below the OECD average, exposing major weaknesses in what Germans had long regarded as one of the world’s premier education systems. The exam results and the resultant jolt to public opinion precipitated education reforms centered on increasing opportunities for minorities, lengthening school days and strengthening teaching requirements. On subsequent exams, Germany demonstrated significant improvement; in 2012, German scores far surpassed the OECD average. Though improvements may have been the product of other factors (e.g., continued East German assimilation), these reforms have been hailed as largely successful.

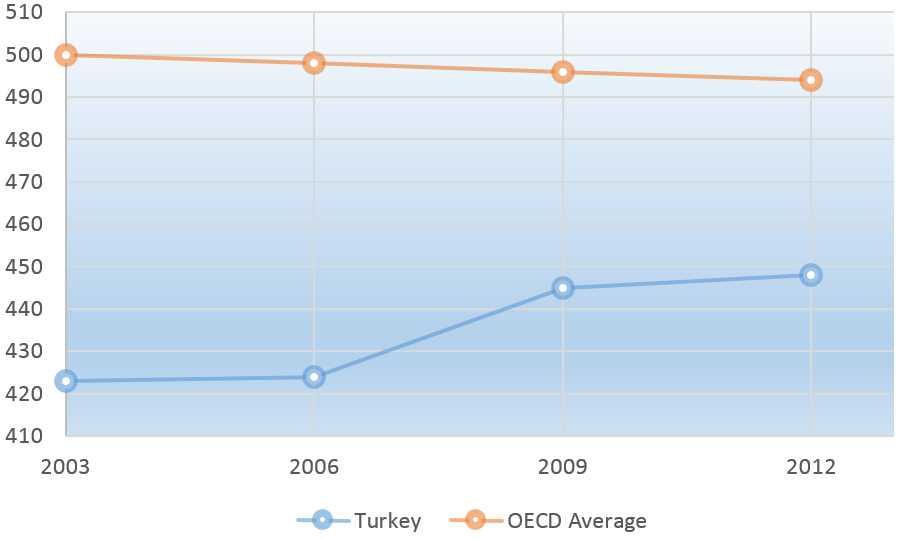

The above chart shows how longitudinal data can be used to evaluate a country’s ability to improve its education systems. Turkey, whose scores have improved at a rate exceeding that of nearly every other country surveyed, is an example of this application. Despite consistently garnering among the poorest scores on PISA, Turkey succeeded in narrowing the gap between itself and its European neighbors. Complementing the country’s progress is the fact that its scores now exceed the OECD average when adjusted for per capita GDP. Improvements may be attributed to a combination of deliberate changes in education policy, such as a near three-fold increase in education spending, and Turkey’s significant strides in poverty reduction.

Public attention to scores is perhaps most apparent in the United States, where each release of exam data is typically followed by popular outcry regarding the US’s usually low rankings and statements from officials promising reform. Unsurprisingly, exam results have thus served as a major justification for new nationwide education initiatives. The Common Core Curriculum, a recent and major education reform effort sponsored by the National Governors Association and the White House, is advocated for on the basis of improving the US’s international standing. In fact, much of the Common Core Curriculum has been designed specifically to improve the US’s PISA scores.

Exam results can become problematic, however, if they gain undue influence over education programs. Martin Carnoy and Richard Rothstein of the Economic Policy Institute argue that international exams such as TIMSS and PISA fail to account for high levels of poverty and wealth inequality in the US relative to other economically advanced countries, making the US’s poor results misleading. The two contend that the US’s education system, adjusted for inequality, is sufficiently functional, and they caution against radical reform efforts. Other observers have linked exam “hysteria” to earlier failed policies that emphasized standardized testing, most notably the No Child Left Behind Act of the Bush administration.

Additionally, for many Americans, test results placing Shanghai and Hong Kong in top spots on the global education spectrum have had the effect of a second Sputnik, spurring a sense of alarming decline at the hands of an international rival. Excessive attention to Chinese results exacerbates an unnecessary but persistent sense of rivalry between the US and China. This hostile mentality is not only potentially harmful to cooperation, but also severely misguided. To use the results of two highly advanced cities as representative of the entirety of China is analogous to applying the results of Manhattan alone (which would undoubtedly far exceed the US average) to declare the United States the world’s leader in education.

The significance of exam results is further diminished when different exams offer conflicting pieces of information. Though studies have demonstrated a correlation between results on the PISA and TIMSS exams, significant differences still persist. For instance, Malaysia, which scored far below the United States on the 2012 PISA, had a slightly better performance on the 2011 TIMSS. The opposite observation is true for New Zealand, which outperformed the US on PISA but underperformed on TIMSS. A likely explanation is that discrepancies in relative scores between the two exams reflect a nation’s education philosophy and curricula. This leaves education analysts in a dilemma: they lack the ability to determine which exam results or school curricula are objectively better.

Though these underlying issues may undermine the usefulness of exam results, it’s undeniable that in many cases the PISA and TIMSS exams offer meaningful insight into the performance of national education systems, which is why it’s no surprise that an increasing number of countries opt to take them. With participation growing from 40 countries in the 1999 TIMSS report to over 60 in 2015, and from 32 countries in the 2000 PISA to nearly 70 in 2015, the importance of international exams seems likely only to grow. But, as with many pieces of data, exam scores cannot provide a fully comprehensive portrayal of an education system, and must therefore be applied to policy decisions with discretion.

The views expressed by the author do not necessarily reflect those of the Glimpse from the Globe staff, editors, or governors.